At a Glance

- AI-generated images and videos spread within days of 2026’s first major news event

- Fake ICE shooting image and Maduro arrest photos duped millions online

- Why it matters: Experts warn we’re near the point where any photo or clip can be faked, forcing everyone to doubt what they see

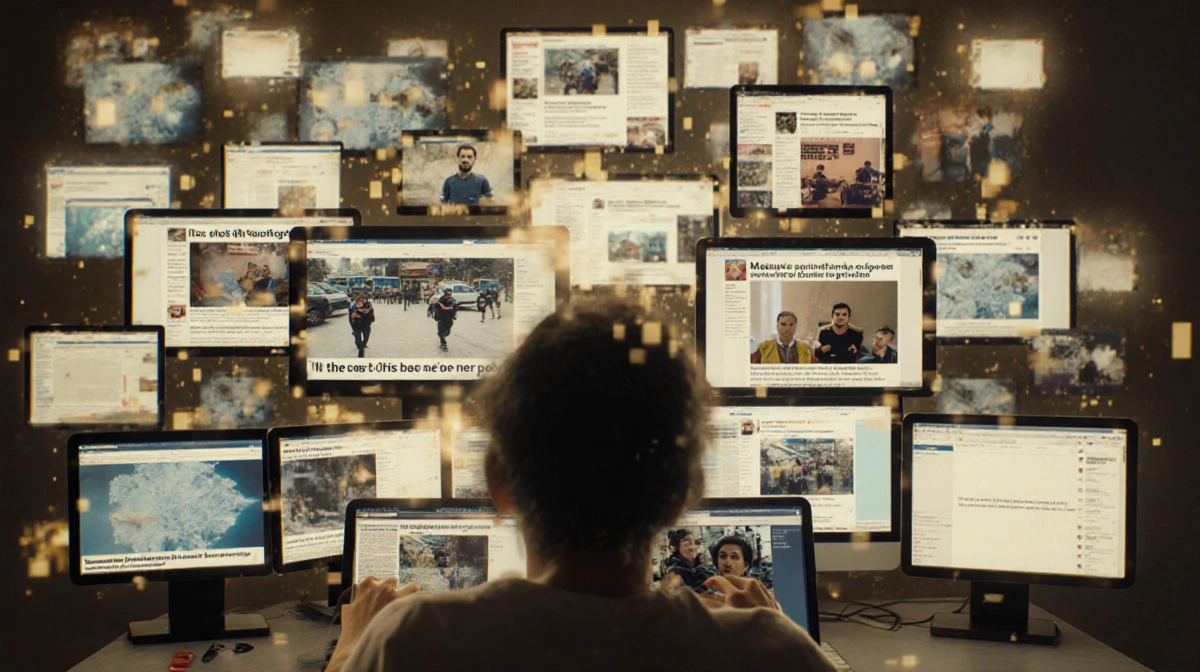

The first week of 2026 delivered a sobering milestone: viral footage and photos from President Trump’s Venezuela operation turned out to be AI fabrications, exposing how quickly synthetic media now hijacks fast-moving news.

The Venezuela Operation: A Case Study in Chaos

Within hours of Trump posting a photo of a handcuffed Nicolás Maduro aboard a Navy ship, AI-generated videos of Venezuelans cheering the arrest flooded X and other platforms. Elon Musk shared one such clip, amplifying its reach before its artificial origin was clear.

- A fake image of an ICE officer shooting a woman in her car circulated widely

- AI edits attempted to unmask the officer involved

- Real celebrations merged with synthetic content, blurring truth for viewers

Jeff Hancock, founding director of the Stanford Social Media Lab, says fast-breaking events are fertile ground for forgeries:

> “For a while people are really going to not trust things they see in digital spaces.”

From Courtrooms to Your Feed: No One Is Immune

AI deepfakes have already surfaced in court evidence. Late last year, AI-generated clips showed Ukrainian soldiers apologizing to Russia and surrendering en masse, footage that some officials initially treated as genuine.

| Detection Method | Current Reliability |
|---|---|
| Counting fingers | Rapidly obsolete |
| Visual artifacts | Diminishing fast |
| Source checking | Still effective |

Hany Farid, a UC Berkeley computer scientist, found that people mislabel real content as fake almost as often as they fall for synthetic media. Political material worsens the confusion:

> “When I send you something that conforms to your worldview, you want to believe it.”

Cognitive Overload Fuels Disengagement

Renee Hobbs, a University of Rhode Island communication professor, warns that constant skepticism exhausts users. An understandable response is to stop caring whether content is real, which undermines public motivation to seek truth.

Efforts to fight back include:

- OECD plans a global AI literacy test for 15-year-olds by 2029

- Instagram head Adam Mosseri urges users to weigh who shares content and why

- Open-source tools like DeepFake-o-meter let anyone upload media for analysis

Siwei Lyu, University at Buffalo professor, recommends simple habits:

> “Common awareness and common sense are the most important protection measures we have.”

Key Takeaways

- AI advances now make any image or video suspect within hours of major news

- Traditional cues like blurry edges or extra fingers no longer reliably expose fakes

- Confirmation bias means people accept media that fits their politics and flag real content that doesn’t

- Experts advise focusing on the source’s credibility rather than the media itself

- Media literacy education worldwide is scrambling to catch up

The Venezuela episode signals a new normal: seeing is no longer believing, and everyone must treat viral visuals with caution.