> At a Glance

> – Hours after a masked ICE agent fatally shot Renee Nicole Good in Minneapolis, AI-doctored images purporting to show his unmasked face flooded social media

> – The altered pictures racked up millions of views, and posts sharing them falsely named real people, including the CEO of the Minnesota Star Tribune

> – Why it matters: The rapid spread of synthetic “evidence” undermines accurate identification and endangers wrongly accused individuals

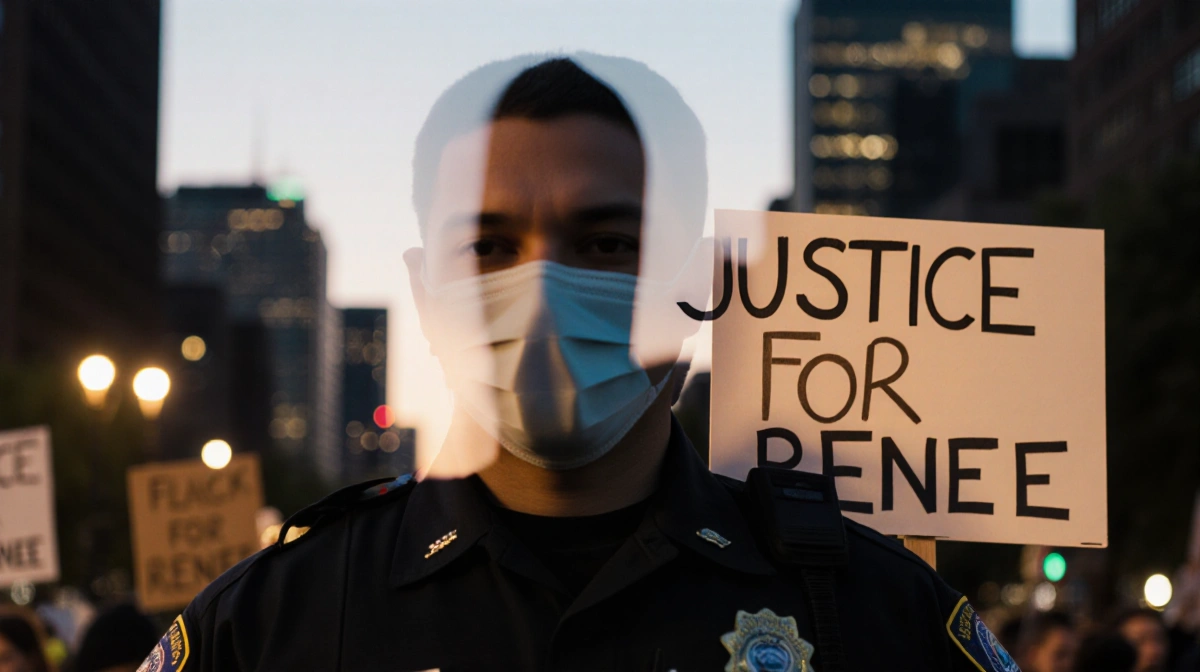

A fatal Wednesday-morning shooting by a masked federal officer in Minneapolis has triggered a wave of AI-altered images that claim to reveal the agent’s identity, racking up millions of views despite having no basis in fact.

How the Fake Images Spread

Video from the scene shows three masked ICE agents surrounding an SUV; when the driver begins to move the vehicle, one agent fires, killing Renee Nicole Good, 37. None of the footage captures the officers’ faces, yet within hours multiple platforms were awash with synthetic stills appearing to show one agent unmasked.

The posts have drawn heavy engagement:

- An X post by Claude Taylor featuring an AI-generated “unmasked” photo has topped 1.2 million views

- A Threads account demanded the agent’s home address under the altered image, collecting 3,500 likes

- Versions appeared on Facebook, Instagram, TikTok, Bluesky and beyond

Expert Warning on AI Reliability

Hany Farid, a UC Berkeley researcher who studies AI image enhancement, cautions that these tools fabricate facial details.

> “AI-powered enhancement has a tendency to hallucinate facial details … an enhanced image … may be devoid of reality with respect to biometric identification.”

He adds that because half of the agent’s face is obscured in the original video, no automated method can reliably reconstruct his true identity.

Misidentification Fallout

Alongside the fake photos, posters have publicly guessed at names. WIRED confirmed that at least two of the names being floated are not linked to any ICE personnel, and one high-profile misidentification targeted Steve Grove, publisher and CEO of the Minnesota Star Tribune.

Chris Iles, vice president of communications at the newspaper, tells News Of Fort Worth:

> “We are currently monitoring a coordinated online disinformation campaign incorrectly identifying the ICE agent … the ICE agent has no known affiliation with the Minnesota Star Tribune and is certainly not our publisher and CEO Steve Grove.”

A parallel case surfaced in September, when AI-generated imagery purporting to show the suspect in the killing of Charlie Kirk circulated online; the man actually arrested looked nothing like the synthetic face.

Key Takeaways

- AI-edited stills from the Minneapolis shooting scene fabricate an officer’s face

- Millions have viewed and shared the content, prompting false accusations

- Experts stress that partial facial data cannot be reliably reconstructed

- Authorities have confirmed only that the agent works for ICE; no official identification has been released

As synthetic media tools proliferate, the episode underscores how quickly manufactured “proof” can eclipse verified facts in the wake of high-profile incidents.